Initial commit

1

.github/FUNDING.yml

vendored

Normal file

|

|

@@ -0,0 +1 @@

|

|||

github: ryanheise

|

||||

48

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

|

|

@@ -0,0 +1,48 @@

|

|||

---

|

||||

name: Bug report

|

||||

about: Create a report to help us improve

|

||||

title: ''

|

||||

labels: 1 backlog, bug

|

||||

assignees: ryanheise

|

||||

|

||||

---

|

||||

|

||||

<!-- ALL SECTIONS BELOW MUST BE COMPLETED -->

|

||||

**Which API doesn't behave as documented, and how does it misbehave?**

|

||||

Name here the specific methods or fields that are not behaving as documented, and explain clearly what is happening.

|

||||

|

||||

**Minimal reproduction project**

|

||||

Provide a link here using one of two options:

|

||||

1. Fork this repository and modify the example to reproduce the bug, then provide a link here.

|

||||

2. If the unmodified official example already reproduces the bug, just write "The example".

|

||||

|

||||

**To Reproduce (i.e. user steps, not code)**

|

||||

Steps to reproduce the behavior:

|

||||

1. Go to '...'

|

||||

2. Click on '....'

|

||||

3. Scroll down to '....'

|

||||

4. See error

|

||||

|

||||

**Error messages**

|

||||

|

||||

```

|

||||

If applicable, copy & paste error message here, within the triple backticks to preserve formatting.

|

||||

```

|

||||

|

||||

**Expected behavior**

|

||||

A clear and concise description of what you expected to happen.

|

||||

|

||||

**Screenshots**

|

||||

If applicable, add screenshots to help explain your problem.

|

||||

|

||||

**Runtime Environment (please complete the following information if relevant):**

|

||||

- Device: [e.g. Samsung Galaxy Note 8]

|

||||

- OS: [e.g. Android 8.0.0]

|

||||

|

||||

**Flutter SDK version**

|

||||

```

|

||||

insert output of "flutter doctor" here

|

||||

```

|

||||

|

||||

**Additional context**

|

||||

Add any other context about the problem here.

|

||||

8

.github/ISSUE_TEMPLATE/config.yml

vendored

Normal file

|

|

@@ -0,0 +1,8 @@

|

|||

blank_issues_enabled: false

|

||||

contact_links:

|

||||

- name: Community Support

|

||||

url: https://stackoverflow.com/search?q=audio_service

|

||||

about: Ask for help on Stack Overflow.

|

||||

- name: New to Flutter?

|

||||

url: https://gitter.im/flutter/flutter

|

||||

about: Chat with other Flutter developers on Gitter.

|

||||

39

.github/ISSUE_TEMPLATE/documentation-request.md

vendored

Normal file

|

|

@@ -0,0 +1,39 @@

|

|||

---

|

||||

name: Documentation request

|

||||

about: Suggest an improvement to the documentation

|

||||

title: ''

|

||||

labels: 1 backlog, documentation

|

||||

assignees: ryanheise

|

||||

|

||||

---

|

||||

|

||||

<!--

|

||||

|

||||

PLEASE READ CAREFULLY!

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

FOR YOUR DOCUMENTATION REQUEST TO BE PROCESSED, YOU WILL NEED

|

||||

TO FILL IN ALL SECTIONS BELOW. DON'T DELETE THE HEADINGS.

|

||||

|

||||

|

||||

THANK YOU :-D

|

||||

|

||||

|

||||

-->

|

||||

|

||||

**To which pages does your suggestion apply?**

|

||||

|

||||

- Direct URL 1

|

||||

- Direct URL 2

|

||||

- ...

|

||||

|

||||

**Quote the sentence(s) from the documentation to be improved (if any)**

|

||||

|

||||

> Insert here. (Skip if you are proposing an entirely new section.)

|

||||

|

||||

**Describe your suggestion**

|

||||

|

||||

...

|

||||

38

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

|

|

@@ -0,0 +1,38 @@

|

|||

---

|

||||

name: Feature request

|

||||

about: Suggest an idea for this project

|

||||

title: ''

|

||||

labels: 1 backlog, enhancement

|

||||

assignees: ryanheise

|

||||

|

||||

---

|

||||

|

||||

<!--

|

||||

|

||||

PLEASE READ CAREFULLY!

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

FOR YOUR FEATURE REQUEST TO BE PROCESSED, YOU WILL NEED

|

||||

TO FILL IN ALL SECTIONS BELOW. DON'T DELETE THE HEADINGS.

|

||||

|

||||

|

||||

THANK YOU :-D

|

||||

|

||||

|

||||

-->

|

||||

|

||||

|

||||

**Is your feature request related to a problem? Please describe.**

|

||||

A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

|

||||

|

||||

**Describe the solution you'd like**

|

||||

A clear and concise description of what you want to happen.

|

||||

|

||||

**Describe alternatives you've considered**

|

||||

A clear and concise description of any alternative solutions or features you've considered.

|

||||

|

||||

**Additional context**

|

||||

Add any other context or screenshots about the feature request here.

|

||||

19

.github/ISSUE_TEMPLATE/frequently-asked-questions.md

vendored

Normal file

|

|

@@ -0,0 +1,19 @@

|

|||

---

|

||||

name: Frequently Asked Questions

|

||||

about: Suggest a new question for the Wiki FAQ

|

||||

title: ''

|

||||

labels: 1 backlog, question

|

||||

assignees: ryanheise

|

||||

|

||||

---

|

||||

|

||||

## Checklist

|

||||

|

||||

<!-- Replace [ ] with [x] to confirm an item in the checklist -->

|

||||

|

||||

- [ ] The question is not already in the FAQ.

|

||||

- [ ] The question is not too narrow or specific to a particular application.

|

||||

|

||||

## Suggested Question

|

||||

|

||||

Write the question here.

|

||||

12

.github/workflows/auto-close.yml

vendored

Normal file

|

|

@@ -0,0 +1,12 @@

|

|||

name: Autocloser

|

||||

on: [issues]

|

||||

jobs:

|

||||

autoclose:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Autoclose issues that did not follow issue template

|

||||

uses: roots/issue-closer-action@v1.1

|

||||

with:

|

||||

repo-token: ${{ secrets.GITHUB_TOKEN }}

|

||||

issue-close-message: "This issue was automatically closed because it did not follow the issue template."

|

||||

issue-pattern: "Which API(.|[\\r\\n])*Minimal reproduction project(.|[\\r\\n])*To Reproduce|To which pages(.|[\\r\\n])*Describe your suggestion|Is your feature request(.|[\\r\\n])*Describe the solution you'd like"

|

||||

19

.gitignore

vendored

Normal file

|

|

@@ -0,0 +1,19 @@

|

|||

.DS_Store

|

||||

.dart_tool/

|

||||

|

||||

.packages

|

||||

.pub/

|

||||

pubspec.lock

|

||||

|

||||

build/

|

||||

|

||||

doc/

|

||||

**/ios/Flutter/flutter_export_environment.sh

|

||||

android/.project

|

||||

example/android/.project

|

||||

android/.classpath

|

||||

android/.settings/org.eclipse.buildship.core.prefs

|

||||

example/android/.settings/org.eclipse.buildship.core.prefs

|

||||

example/android/app/.classpath

|

||||

example/android/app/.project

|

||||

example/android/app/.settings/org.eclipse.buildship.core.prefs

|

||||

2

.idea/.gitignore

generated

vendored

Normal file

|

|

@@ -0,0 +1,2 @@

|

|||

# Project exclude paths

|

||||

/.

|

||||

116

.idea/codeStyles/Project.xml

generated

Normal file

|

|

@@ -0,0 +1,116 @@

|

|||

<component name="ProjectCodeStyleConfiguration">

|

||||

<code_scheme name="Project" version="173">

|

||||

<codeStyleSettings language="XML">

|

||||

<indentOptions>

|

||||

<option name="CONTINUATION_INDENT_SIZE" value="4" />

|

||||

</indentOptions>

|

||||

<arrangement>

|

||||

<rules>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>xmlns:android</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>^$</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>xmlns:.*</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>^$</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

<order>BY_NAME</order>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>.*:id</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>http://schemas.android.com/apk/res/android</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>.*:name</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>http://schemas.android.com/apk/res/android</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>name</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>^$</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>style</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>^$</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>.*</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>^$</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

<order>BY_NAME</order>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>.*</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>http://schemas.android.com/apk/res/android</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

<order>ANDROID_ATTRIBUTE_ORDER</order>

|

||||

</rule>

|

||||

</section>

|

||||

<section>

|

||||

<rule>

|

||||

<match>

|

||||

<AND>

|

||||

<NAME>.*</NAME>

|

||||

<XML_ATTRIBUTE />

|

||||

<XML_NAMESPACE>.*</XML_NAMESPACE>

|

||||

</AND>

|

||||

</match>

|

||||

<order>BY_NAME</order>

|

||||

</rule>

|

||||

</section>

|

||||

</rules>

|

||||

</arrangement>

|

||||

</codeStyleSettings>

|

||||

</code_scheme>

|

||||

</component>

|

||||

19

.idea/libraries/Dart_SDK.xml

generated

Normal file

|

|

@@ -0,0 +1,19 @@

|

|||

<component name="libraryTable">

|

||||

<library name="Dart SDK">

|

||||

<CLASSES>

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/async" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/collection" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/convert" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/core" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/developer" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/html" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/io" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/isolate" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/math" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/mirrors" />

|

||||

<root url="file:///home/ryan/opt/flutter/bin/cache/dart-sdk/lib/typed_data" />

|

||||

</CLASSES>

|

||||

<JAVADOC />

|

||||

<SOURCES />

|

||||

</library>

|

||||

</component>

|

||||

15

.idea/libraries/Flutter_Plugins.xml

generated

Normal file

|

|

@@ -0,0 +1,15 @@

|

|||

<component name="libraryTable">

|

||||

<library name="Flutter Plugins" type="FlutterPluginsLibraryType">

|

||||

<CLASSES>

|

||||

<root url="file://$USER_HOME$/flutter/.pub-cache/hosted/pub.dartlang.org/flutter_isolate-1.0.0+14" />

|

||||

<root url="file://$USER_HOME$/flutter/.pub-cache/hosted/pub.dartlang.org/sqflite-1.3.1+1" />

|

||||

<root url="file://$USER_HOME$/flutter/.pub-cache/hosted/pub.dartlang.org/audio_session-0.0.7" />

|

||||

<root url="file://$USER_HOME$/flutter/.pub-cache/hosted/pub.dartlang.org/path_provider_macos-0.0.4+4" />

|

||||

<root url="file://$PROJECT_DIR$" />

|

||||

<root url="file://$USER_HOME$/flutter/.pub-cache/hosted/pub.dartlang.org/path_provider-1.6.14" />

|

||||

<root url="file://$USER_HOME$/flutter/.pub-cache/hosted/pub.dartlang.org/path_provider_linux-0.0.1+2" />

|

||||

</CLASSES>

|

||||

<JAVADOC />

|

||||

<SOURCES />

|

||||

</library>

|

||||

</component>

|

||||

9

.idea/libraries/Flutter_for_Android.xml

generated

Normal file

|

|

@@ -0,0 +1,9 @@

|

|||

<component name="libraryTable">

|

||||

<library name="Flutter for Android">

|

||||

<CLASSES>

|

||||

<root url="jar:///home/ryan/opt/flutter/bin/cache/artifacts/engine/android-arm/flutter.jar!/" />

|

||||

</CLASSES>

|

||||

<JAVADOC />

|

||||

<SOURCES />

|

||||

</library>

|

||||

</component>

|

||||

9

.idea/modules.xml

generated

Normal file

|

|

@@ -0,0 +1,9 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<project version="4">

|

||||

<component name="ProjectModuleManager">

|

||||

<modules>

|

||||

<module fileurl="file://$PROJECT_DIR$/audio_service.iml" filepath="$PROJECT_DIR$/audio_service.iml" />

|

||||

<module fileurl="file://$PROJECT_DIR$/audio_service_android.iml" filepath="$PROJECT_DIR$/audio_service_android.iml" />

|

||||

</modules>

|

||||

</component>

|

||||

</project>

|

||||

6

.idea/runConfigurations/example_lib_main_dart.xml

generated

Normal file

|

|

@@ -0,0 +1,6 @@

|

|||

<component name="ProjectRunConfigurationManager">

|

||||

<configuration default="false" name="example/lib/main.dart" type="FlutterRunConfigurationType" factoryName="Flutter">

|

||||

<option name="filePath" value="$PROJECT_DIR$/example/lib/main.dart" />

|

||||

<method />

|

||||

</configuration>

|

||||

</component>

|

||||

6

.idea/vcs.xml

generated

Normal file

|

|

@@ -0,0 +1,6 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<project version="4">

|

||||

<component name="VcsDirectoryMappings">

|

||||

<mapping directory="" vcs="Git" />

|

||||

</component>

|

||||

</project>

|

||||

67

.idea/workspace.xml

generated

Normal file

|

|

@@ -0,0 +1,67 @@

|

|||

<?xml version="1.0" encoding="UTF-8"?>

|

||||

<project version="4">

|

||||

<component name="ChangeListManager">

|

||||

<list default="true" id="10fe4e03-808b-4cca-b552-b754ebc9fc8e" name="Default Changelist" comment="">

|

||||

<change beforePath="$PROJECT_DIR$/.idea/workspace.xml" beforeDir="false" afterPath="$PROJECT_DIR$/.idea/workspace.xml" afterDir="false" />

|

||||

<change beforePath="$PROJECT_DIR$/lib/audio_service.dart" beforeDir="false" afterPath="$PROJECT_DIR$/lib/audio_service.dart" afterDir="false" />

|

||||

</list>

|

||||

<option name="EXCLUDED_CONVERTED_TO_IGNORED" value="true" />

|

||||

<option name="SHOW_DIALOG" value="false" />

|

||||

<option name="HIGHLIGHT_CONFLICTS" value="true" />

|

||||

<option name="HIGHLIGHT_NON_ACTIVE_CHANGELIST" value="false" />

|

||||

<option name="LAST_RESOLUTION" value="IGNORE" />

|

||||

</component>

|

||||

<component name="ExecutionTargetManager" SELECTED_TARGET="Android10" />

|

||||

<component name="Git.Settings">

|

||||

<option name="RECENT_GIT_ROOT_PATH" value="$PROJECT_DIR$" />

|

||||

</component>

|

||||

<component name="IgnoredFileRootStore">

|

||||

<option name="generatedRoots">

|

||||

<set>

|

||||

<option value="$PROJECT_DIR$/.idea" />

|

||||

</set>

|

||||

</option>

|

||||

</component>

|

||||

<component name="ProjectId" id="1heOT4v7Yxgr9Nb2PRjB67yYpUz" />

|

||||

<component name="PropertiesComponent">

|

||||

<property name="dart.analysis.tool.window.force.activate" value="true" />

|

||||

<property name="last_opened_file_path" value="$PROJECT_DIR$" />

|

||||

<property name="show.migrate.to.gradle.popup" value="false" />

|

||||

</component>

|

||||

<component name="RunDashboard">

|

||||

<option name="ruleStates">

|

||||

<list>

|

||||

<RuleState>

|

||||

<option name="name" value="ConfigurationTypeDashboardGroupingRule" />

|

||||

</RuleState>

|

||||

<RuleState>

|

||||

<option name="name" value="StatusDashboardGroupingRule" />

|

||||

</RuleState>

|

||||

</list>

|

||||

</option>

|

||||

</component>

|

||||

<component name="SvnConfiguration">

|

||||

<configuration />

|

||||

</component>

|

||||

<component name="TaskManager">

|

||||

<task active="true" id="Default" summary="Default task">

|

||||

<changelist id="10fe4e03-808b-4cca-b552-b754ebc9fc8e" name="Default Changelist" comment="" />

|

||||

<created>1600368096000</created>

|

||||

<option name="number" value="Default" />

|

||||

<option name="presentableId" value="Default" />

|

||||

<updated>1600368096000</updated>

|

||||

</task>

|

||||

<servers />

|

||||

</component>

|

||||

<component name="Vcs.Log.Tabs.Properties">

|

||||

<option name="TAB_STATES">

|

||||

<map>

|

||||

<entry key="MAIN">

|

||||

<value>

|

||||

<State />

|

||||

</value>

|

||||

</entry>

|

||||

</map>

|

||||

</option>

|

||||

</component>

|

||||

</project>

|

||||

254

CHANGELOG.md

Normal file

|

|

@@ -0,0 +1,254 @@

|

|||

## 0.15.0

|

||||

|

||||

* Web support (@keaganhilliard)

|

||||

* macOS support (@hacker1024)

|

||||

* Route next/previous buttons to onClick on Android (@stonega)

|

||||

* Correctly scale skip intervals for control center (@subhash279)

|

||||

* Handle repeated stop/start calls more robustly.

|

||||

* Fix Android 11 bugs.

|

||||

|

||||

## 0.14.1

|

||||

|

||||

* audio_session dependency now supports minSdkVersion 16 on Android.

|

||||

|

||||

## 0.14.0

|

||||

|

||||

* audio session management now handled by audio_session (see [Migration Guide](https://github.com/ryanheise/audio_service/wiki/Migration-Guide#0140)).

|

||||

* Exceptions in background audio task are logged and forwarded to client.

|

||||

|

||||

## 0.13.0

|

||||

|

||||

* All BackgroundAudioTask callbacks are now async.

|

||||

* Add default implementation of onSkipToNext/onSkipToPrevious.

|

||||

* Bug fixes.

|

||||

|

||||

## 0.12.0

|

||||

|

||||

* Add setRepeatMode/setShuffleMode.

|

||||

* Enable iOS Control Center buttons based on setState.

|

||||

* Support seek forward/backward in iOS Control Center.

|

||||

* Add default behaviour to BackgroundAudioTask.

|

||||

* Bug fixes.

|

||||

* Simplify example.

|

||||

|

||||

## 0.11.2

|

||||

|

||||

* Fix bug with album metadata on Android.

|

||||

|

||||

## 0.11.1

|

||||

|

||||

* Allow setting the iOS audio session category and options.

|

||||

* Allow AudioServiceWidget to recognise swipe gesture on iOS.

|

||||

* Check for null title and album on Android.

|

||||

|

||||

## 0.11.0

|

||||

|

||||

* Breaking change: onStop must await super.onStop to shutdown task.

|

||||

* Fix Android memory leak.

|

||||

|

||||

## 0.10.0

|

||||

|

||||

* Replace androidStopOnRemoveTask with onTaskRemoved callback.

|

||||

* Add onClose callback.

|

||||

* Breaking change: new MediaButtonReceiver in AndroidManifest.xml.

|

||||

|

||||

## 0.9.0

|

||||

|

||||

* New state model: split into playing + processingState.

|

||||

* androidStopForegroundOnPause ties foreground state to playing state.

|

||||

* Add MediaItem.toJson/fromJson.

|

||||

* Add AudioService.notificationClickEventStream (Android).

|

||||

* Add AudioService.updateMediaItem.

|

||||

* Add AudioService.setSpeed.

|

||||

* Add PlaybackState.bufferedPosition.

|

||||

* Add custom AudioService.start parameters.

|

||||

* Rename replaceQueue -> updateQueue.

|

||||

* Rename Android-specific start parameters with android- prefix.

|

||||

* Use Duration type for all time values.

|

||||

* Pass fastForward/rewind intervals through to background task.

|

||||

* Allow connections from background contexts (e.g. android_alarm_manager).

|

||||

* Unify iOS/Android focus APIs.

|

||||

* Bug fixes and dependency updates.

|

||||

|

||||

## 0.8.0

|

||||

|

||||

* Allow UI to await the result of custom actions.

|

||||

* Allow background to broadcast custom events to UI.

|

||||

* Improve memory management for art bitmaps on Android.

|

||||

* Convenience methods: replaceQueue, playMediaItem, addQueueItems.

|

||||

* Bug fixes and dependency updates.

|

||||

|

||||

## 0.7.2

|

||||

|

||||

* Shutdown background task if task killed by IO (Android).

|

||||

* Bug fixes and dependency updates.

|

||||

|

||||

## 0.7.1

|

||||

|

||||

* Add AudioServiceWidget to auto-manage connections.

|

||||

* Allow file URIs for artUri.

|

||||

|

||||

## 0.7.0

|

||||

|

||||

* Support skip forward/backward in command center (iOS).

|

||||

* Add 'extras' field to MediaItem.

|

||||

* Artwork caching and preloading supported on Android+iOS.

|

||||

* Bug fixes.

|

||||

|

||||

## 0.6.2

|

||||

|

||||

* Bug fixes.

|

||||

|

||||

## 0.6.1

|

||||

|

||||

* Option to stop service on closing task (Android).

|

||||

|

||||

## 0.6.0

|

||||

|

||||

* Migrated to V2 embedding API (Flutter 1.12).

|

||||

|

||||

## 0.5.7

|

||||

|

||||

* Destroy isolates after use.

|

||||

|

||||

## 0.5.6

|

||||

|

||||

* Support Flutter 1.12.

|

||||

|

||||

## 0.5.5

|

||||

|

||||

* Bump sdk version to 2.6.0.

|

||||

|

||||

## 0.5.4

|

||||

|

||||

* Fix Android memory leak.

|

||||

|

||||

## 0.5.3

|

||||

|

||||

* Support Queue, album art and other missing features on iOS.

|

||||

|

||||

## 0.5.2

|

||||

|

||||

* Update documentation and example.

|

||||

|

||||

## 0.5.1

|

||||

|

||||

* Playback state broadcast on connect (iOS).

|

||||

|

||||

## 0.5.0

|

||||

|

||||

* Partial iOS support.

|

||||

|

||||

## 0.4.2

|

||||

|

||||

* Option to call stopForeground on pause.

|

||||

|

||||

## 0.4.1

|

||||

|

||||

* Fix queue support bug

|

||||

|

||||

## 0.4.0

|

||||

|

||||

* Breaking change: AudioServiceBackground.run takes a single parameter.

|

||||

|

||||

## 0.3.1

|

||||

|

||||

* Update example to disconnect when pressing back button.

|

||||

|

||||

## 0.3.0

|

||||

|

||||

* Breaking change: updateTime now measured since epoch instead of boot time.

|

||||

|

||||

## 0.2.1

|

||||

|

||||

* Streams use RxDart BehaviorSubject.

|

||||

|

||||

## 0.2.0

|

||||

|

||||

* Migrate to AndroidX.

|

||||

|

||||

## 0.1.1

|

||||

|

||||

* Bump targetSdkVersion to 28

|

||||

* Clear client-side metadata and state on stop.

|

||||

|

||||

## 0.1.0

|

||||

|

||||

* onClick is now always called for media button clicks.

|

||||

* Option to set notifications as ongoing.

|

||||

|

||||

## 0.0.15

|

||||

|

||||

* Option to set subText in notification.

|

||||

* Support media item ratings

|

||||

|

||||

## 0.0.14

|

||||

|

||||

* Can update existing media items.

|

||||

* Can specify order of Android notification compact actions.

|

||||

* Bug fix with connect.

|

||||

|

||||

## 0.0.13

|

||||

|

||||

* Option to preload artwork.

|

||||

* Allow client to browse media items.

|

||||

|

||||

## 0.0.12

|

||||

|

||||

* More options to customise the notification content.

|

||||

|

||||

## 0.0.11

|

||||

|

||||

* Breaking API changes.

|

||||

* Connection callbacks replaced by a streams API.

|

||||

* AudioService properties for playbackState, currentMediaItem, queue.

|

||||

* Option to set Android notification channel description.

|

||||

* AudioService.customAction awaits completion of the action.

|

||||

|

||||

## 0.0.10

|

||||

|

||||

* Bug fixes with queue management.

|

||||

* AudioService.start completes when the background task is ready.

|

||||

|

||||

## 0.0.9

|

||||

|

||||

* Support queue management.

|

||||

|

||||

## 0.0.8

|

||||

|

||||

* Bug fix.

|

||||

|

||||

## 0.0.7

|

||||

|

||||

* onMediaChanged takes MediaItem parameter.

|

||||

* Support playFromMediaId, fastForward, rewind.

|

||||

|

||||

## 0.0.6

|

||||

|

||||

* All APIs address media items by String mediaId.

|

||||

|

||||

## 0.0.5

|

||||

|

||||

* Show media art in notification and lock screen.

|

||||

|

||||

## 0.0.4

|

||||

|

||||

* Support and example for playing TextToSpeech.

|

||||

* Click notification to launch UI.

|

||||

* More properties added to MediaItem.

|

||||

* Minor API changes.

|

||||

|

||||

## 0.0.3

|

||||

|

||||

* Pause now keeps background isolate running

|

||||

* Notification channel id is generated from package name

|

||||

* Updated example to use audioplayer plugin

|

||||

* Fixed media button handling

|

||||

|

||||

## 0.0.2

|

||||

|

||||

* Better connection handling.

|

||||

|

||||

## 0.0.1

|

||||

|

||||

* Initial release.

|

||||

21

LICENSE

Normal file

|

|

@@ -0,0 +1,21 @@

|

|||

MIT License

|

||||

|

||||

Copyright (c) 2018-2020 Ryan Heise and the project contributors.

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

269

README.md

Normal file

|

|

@@ -0,0 +1,269 @@

|

|||

# audio_service

|

||||

|

||||

This plugin wraps around your existing audio code to allow it to run in the background or with the screen turned off, and allows your app to interact with headset buttons, the Android lock screen and notification, iOS control center, wearables and Android Auto. It is suitable for:

|

||||

|

||||

* Music players
* Text-to-speech readers
* Podcast players
* Navigators
* More!

|

||||

|

||||

## How does this plugin work?

|

||||

|

||||

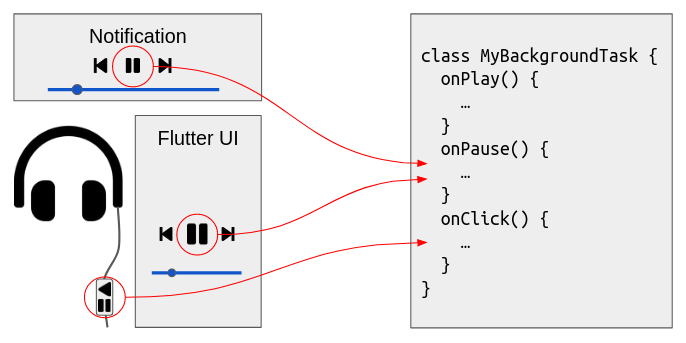

You encapsulate your audio code in a background task which runs in a special isolate that continues to run when your UI is absent. Your background task implements callbacks to respond to playback requests coming from your Flutter UI, headset buttons, the lock screen, notification, iOS control center, car displays and smart watches:

|

||||

|

||||

|

||||

|

||||

You can implement these callbacks to play any sort of audio that is appropriate for your app, such as music files or streams, audio assets, text to speech, synthesised audio, or combinations of these.

|

||||

|

||||

| Feature | Android | iOS | macOS | Web |
| ------- | :-------: | :-----: | :-----: | :-----: |
| background audio | ✅ | ✅ | ✅ | ✅ |
| headset clicks | ✅ | ✅ | ✅ | ✅ |
| start/stop/play/pause/seek/rate | ✅ | ✅ | ✅ | ✅ |
| fast forward/rewind | ✅ | ✅ | ✅ | ✅ |
| repeat/shuffle mode | ✅ | ✅ | ✅ | ✅ |
| queue manipulation, skip next/prev | ✅ | ✅ | ✅ | ✅ |
| custom actions | ✅ | ✅ | ✅ | ✅ |
| custom events | ✅ | ✅ | ✅ | ✅ |
| notifications/control center | ✅ | ✅ | ✅ | ✅ |
| lock screen controls | ✅ | ✅ | | ✅ |
| album art | ✅ | ✅ | ✅ | ✅ |
| Android Auto, Apple CarPlay | (untested) | ✅ | | |

|

||||

|

||||

If you'd like to help with any missing features, please join us on the [GitHub issues page](https://github.com/ryanheise/audio_service/issues).

|

||||

|

||||

## Migrating to 0.14.0

|

||||

|

||||

Audio focus, interruptions (e.g. phone calls), mixing, ducking and the configuration of your app's audio category and attributes are now handled by the [audio_session](https://pub.dev/packages/audio_session) package. Read the [Migration Guide](https://github.com/ryanheise/audio_service/wiki/Migration-Guide#0140) for details.

|

||||

|

||||

## Can I make use of other plugins within the background audio task?

|

||||

|

||||

Yes! `audio_service` is designed to let you implement the audio logic however you want, using whatever plugins you want. You can use your favourite audio plugins such as [just_audio](https://pub.dartlang.org/packages/just_audio), [flutter_radio](https://pub.dev/packages/flutter_radio), [flutter_tts](https://pub.dartlang.org/packages/flutter_tts), and others, within your background audio task. There are also plugins like [just_audio_service](https://github.com/yringler/just_audio_service) that provide default implementations of `BackgroundAudioTask` to make your job easier.

|

||||

|

||||

Note that this plugin will not work with other audio plugins that overlap in responsibility with it (e.g. background audio, iOS control center, Android notifications, lock screen, headset buttons).

|

||||

|

||||

## Example

|

||||

|

||||

### Background code

|

||||

|

||||

Your audio code will run in a special background isolate, separate and detachable from your app's UI. To achieve this, define a subclass of `BackgroundAudioTask` that overrides a set of callbacks to respond to client requests:

|

||||

|

||||

```dart
class MyBackgroundTask extends BackgroundAudioTask {
  // Initialise your audio task.
  onStart(Map<String, dynamic> params) {}
  // Handle a request to stop audio and finish the task.
  // Since 0.11.0, onStop must await super.onStop to shut down the task.
  onStop() async {
    await super.onStop();
  }
  // Handle a request to play audio.
  onPlay() {}
  // Handle a request to pause audio.
  onPause() {}
  // Handle a headset button click (play/pause, skip next/prev).
  onClick(MediaButton button) {}
  // Handle a request to skip to the next queue item.
  onSkipToNext() {}
  // Handle a request to skip to the previous queue item.
  onSkipToPrevious() {}
  // Handle a request to seek to a position.
  onSeekTo(Duration position) {}
}
```

|

||||

|

||||

You can implement these (and other) callbacks to play any type of audio depending on the requirements of your app. For example, if you are building a podcast player, you may have code such as the following:

|

||||

|

||||

```dart
import 'package:audio_service/audio_service.dart';
import 'package:just_audio/just_audio.dart';

class PodcastBackgroundTask extends BackgroundAudioTask {
  AudioPlayer _player = AudioPlayer();
  onPlay() async {
    _player.play();
    // Show the media notification, and let all clients know what
    // playback state and media item to display.
    await AudioServiceBackground.setState(playing: true, ...);
    await AudioServiceBackground.setMediaItem(MediaItem(title: "Hey Jude", ...));
  }
}
```

|

||||

|

||||

If you are instead building a text-to-speech reader, you may have code such as the following:

|

||||

|

||||

```dart

|

||||

import 'package:flutter_tts/flutter_tts.dart';

|

||||

class ReaderBackgroundTask extends BackgroundAudioTask {

|

||||

FlutterTts _tts = FlutterTts();

|

||||

String article;

|

||||

onPlay() async {

|

||||

_tts.speak(article);

|

||||

// Show the media notification, and let all clients know what

|

||||

// playback state and media item to display.

|

||||

await AudioServiceBackground.setState(playing: true, ...);

|

||||

await AudioServiceBackground.setMediaItem(MediaItem(album: "Business Insider", ...));

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

There are several methods in the `AudioServiceBackground` class that allow your background audio task to communicate with clients outside the isolate, such as your Flutter UI (if present), the iOS control center, and the Android notification and lock screen. These are:

|

||||

|

||||

* `AudioServiceBackground.setState` broadcasts the current playback state to all clients. This includes whether or not audio is playing, but also whether audio is buffering, the current playback position and buffer position, the current playback speed, and the set of audio controls that should be made available. When you broadcast this information to all clients, it allows them to update their user interfaces to show the appropriate set of buttons, and show the correct audio position on seek bars, for example. It is important for you to call this method whenever any of these pieces of state changes. You will typically want to call this method from your `onStart`, `onPlay`, `onPause`, `onSkipToNext`, `onSkipToPrevious` and `onStop` callbacks.

|

||||

* `AudioServiceBackground.setMediaItem` broadcasts the currently playing media item to all clients. This includes the track title, artist, genre, duration, any artwork to display, and other information. When you broadcast this information to all clients, it allows them to update their user interface accordingly so that it is displayed on the lock screen, the notification, and in your Flutter UI (if present). You will typically want to call this method from your `onStart`, `onSkipToNext` and `onSkipToPrevious` callbacks.

|

||||

* `AudioServiceBackground.setQueue` broadcasts the current queue to all clients. Some clients like Android Auto may display this information in their user interfaces. You will typically want to call this method from your `onStart` callback. Other callbacks exist where it may be appropriate to call this method such as `onAddQueueItem` and `onRemoveQueueItem`.
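
As a rough illustration of the calls described above, the sketch below shows a pause handler that pauses the player and then broadcasts the new state. It assumes the `setState` named parameters `controls`, `processingState` and `playing` as they exist in the plugin version documented here, and it uses just_audio's `AudioPlayer`. The `playControl` object and its `drawable/ic_action_play_arrow` icon name are hypothetical and must match a drawable in your own Android project.

```dart
import 'package:audio_service/audio_service.dart';
import 'package:just_audio/just_audio.dart';

// Hypothetical control shown in the notification while paused.
// The icon name is an assumption; point it at a drawable in your app.
final playControl = MediaControl(
  androidIcon: 'drawable/ic_action_play_arrow',
  label: 'Play',
  action: MediaAction.play,
);

class SketchBackgroundTask extends BackgroundAudioTask {
  final AudioPlayer _player = AudioPlayer();

  @override
  Future<void> onPause() async {
    // Pause the underlying player first...
    await _player.pause();
    // ...then tell every client (Flutter UI, notification, lock screen)
    // that playback is paused and that only "Play" should be offered.
    await AudioServiceBackground.setState(
      controls: [playControl],
      processingState: AudioProcessingState.ready,
      playing: false,
    );
  }
}
```

The same pattern would apply in `onPlay`, `onStop` and the skip callbacks: change the player first, then broadcast the resulting state.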

|

||||

|

||||

### UI code

|

||||

|

||||

Connecting to `AudioService`:

|

||||

|

||||

```dart

|

||||

// Wrap your "/" route's widget tree in an AudioServiceWidget:

|

||||

return MaterialApp(

|

||||

home: AudioServiceWidget(MainScreen()),

|

||||

);

|

||||

```

|

||||

|

||||

Starting your background audio task:

|

||||

|

||||

```dart

|

||||

await AudioService.start(

|

||||

backgroundTaskEntrypoint: _myEntrypoint,

|

||||

androidNotificationIcon: 'mipmap/ic_launcher',

|

||||

// An example of passing custom parameters.

|

||||

// These will be passed through to your `onStart` callback.

|

||||

params: {'url': 'https://somewhere.com/sometrack.mp3'},

|

||||

);

|

||||

// This must be a top-level function.

|

||||

void _myEntrypoint() => AudioServiceBackground.run(() => MyBackgroundTask());

|

||||

```

|

||||

|

||||

Sending messages to it:

|

||||

|

||||

* `AudioService.play()`

|

||||

* `AudioService.pause()`

|

||||

* `AudioService.click()`

|

||||

* `AudioService.skipToNext()`

|

||||

* `AudioService.skipToPrevious()`

|

||||

* `AudioService.seekTo(Duration(seconds: 53))`

|

||||

|

||||

Shutting it down:

|

||||

|

||||

```dart

|

||||

// This will pass through to your `onStop` callback.

|

||||

AudioService.stop();

|

||||

```

|

||||

|

||||

Reacting to state changes:

|

||||

|

||||

* `AudioService.playbackStateStream` (e.g. playing/paused, buffering/ready)

|

||||

* `AudioService.currentMediaItemStream` (metadata about the currently playing media item)

|

||||

* `AudioService.queueStream` (the current queue/playlist)
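
One possible way to consume these streams in a widget is with a `StreamBuilder`. The following is only a sketch: `PlayPauseButton` is a hypothetical widget name, and it assumes that `PlaybackState` exposes a boolean `playing` field in the plugin version you are using.

```dart
import 'package:audio_service/audio_service.dart';
import 'package:flutter/material.dart';

// Hypothetical widget: a single button that toggles between play and pause
// based on the state most recently broadcast by the background task.
class PlayPauseButton extends StatelessWidget {
  @override
  Widget build(BuildContext context) {
    return StreamBuilder<PlaybackState>(
      stream: AudioService.playbackStateStream,
      builder: (context, snapshot) {
        // No state has been broadcast yet: treat it as "not playing".
        final playing = snapshot.data?.playing ?? false;
        return IconButton(
          icon: Icon(playing ? Icons.pause : Icons.play_arrow),
          // The button only sends a message; the background task decides
          // what to do and broadcasts the resulting state back.
          onPressed: playing ? AudioService.pause : AudioService.play,
        );
      },
    );
  }
}
```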

|

||||

|

||||

Keep in mind that your UI and background task run in separate isolates and do not share memory. The only way they communicate is via message passing. Your Flutter UI will only use the `AudioService` API to communicate with the background task, while your background task will only use the `AudioServiceBackground` API to interact with the clients, which include the Flutter UI.
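
The "no shared memory" point can be seen with plain Dart, independently of this plugin. The sketch below uses only `dart:isolate`; the names `counter`, `backgroundEntrypoint` and `askBackground` are made up for the illustration and are not part of audio_service.

```dart
import 'dart:isolate';

// A top-level variable: every isolate gets its own independent copy.
int counter = 0;

// Entry point of the spawned isolate (must be top-level or static).
void backgroundEntrypoint(SendPort replyTo) {
  counter = 42; // Changes only the spawned isolate's copy.
  replyTo.send(counter); // The value reaches the caller only as a message.
}

// Spawns the isolate and waits for the single message it sends back.
Future<int> askBackground() async {
  final inbox = ReceivePort();
  await Isolate.spawn(backgroundEntrypoint, inbox.sendPort);
  return await inbox.first as int;
}

Future<void> main() async {
  final received = await askBackground();
  print('background isolate reported: $received'); // 42
  print('counter in the main isolate: $counter'); // still 0
}
```

This is why state you set inside your background audio task is not visible from your widgets unless the task broadcasts it, for example via `AudioServiceBackground.setState`.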

|

||||

|

||||

### Connecting to `AudioService` from the background

|

||||

|

||||

You can also send messages to your background audio task from another background callback (e.g. one scheduled by `android_alarm_manager`) by manually connecting to it:

|

||||

|

||||

```dart

|

||||

await AudioService.connect(); // Note: the "await" is necessary!

|

||||

AudioService.play();

|

||||

```

|

||||

|

||||

## Configuring the audio session

|

||||

|

||||

If your app uses audio, you should tell the operating system what kind of usage scenario your app has and how your app will interact with other audio apps on the device. Different audio apps often have unique requirements. For example, when a navigation app speaks driving instructions, a music player should duck its audio while a podcast player should pause its audio. Depending on which one of these three apps you are building, you will need to configure your app's audio settings and callbacks to appropriately handle these interactions.

|

||||

|

||||

Use the [audio_session](https://pub.dev/packages/audio_session) package to change the default audio session configuration for your app. E.g. for a podcast player, you may use:

|

||||

|

||||

```dart

|

||||

final session = await AudioSession.instance;

|

||||

await session.configure(AudioSessionConfiguration.speech());

|

||||

```

|

||||

|

||||

Each time you invoke an audio plugin to play audio, that plugin will activate your app's shared audio session to inform the operating system that your app is actively playing audio. Depending on the configuration set above, this will also inform other audio apps to either stop playing audio, or possibly continue playing at a lower volume (i.e. ducking). You normally do not need to activate the audio session yourself; however, if the audio plugin you use does not activate it, you can do so yourself:

|

||||

|

||||

```dart

|

||||

// Activate the audio session before playing audio.

|

||||

if (await session.setActive(true)) {

|

||||

// Now play audio.

|

||||

} else {

|

||||

// The request was denied and the app should not play audio

|

||||

}

|

||||

```

|

||||

|

||||

When another app activates its audio session, it similarly may ask your app to pause or duck its audio. Once again, the particular audio plugin you use may automatically pause or duck audio when requested. However, if it does not, you can respond to these events yourself by listening to `session.interruptionEventStream`. Similarly, if the audio plugin doesn't handle unplugged headphone events, you can respond to these yourself by listening to `session.becomingNoisyEventStream`. For more information, consult the documentation for [audio_session](https://pub.dev/packages/audio_session).
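
If you do need to handle these events yourself, a handler might look roughly like the sketch below. It follows the event shape described in the audio_session documentation (`event.begin`, `event.type`, `AudioInterruptionType`), which you should check against the version you depend on. The function name `handleInterruptions`, the use of just_audio's `AudioPlayer` and the ducking volume of 0.3 are assumptions made for the example.

```dart
import 'package:audio_session/audio_session.dart';
import 'package:just_audio/just_audio.dart';

// Hypothetical helper: wires a player up to audio session events.
Future<void> handleInterruptions(AudioPlayer player) async {
  final session = await AudioSession.instance;

  session.interruptionEventStream.listen((event) {
    if (event.begin) {
      // Another app has started playing audio.
      switch (event.type) {
        case AudioInterruptionType.duck:
          player.setVolume(0.3); // Keep playing, but quieter.
          break;
        case AudioInterruptionType.pause:
        case AudioInterruptionType.unknown:
          player.pause();
          break;
      }
    } else {
      // The interruption has ended.
      switch (event.type) {
        case AudioInterruptionType.duck:
          player.setVolume(1.0); // Restore the normal volume.
          break;
        case AudioInterruptionType.pause:
          player.play(); // Resume after a temporary pause.
          break;
        case AudioInterruptionType.unknown:
          break; // Stay paused; the user can resume manually.
      }
    }
  });

  // Headphones were unplugged: pause rather than blast the speaker.
  session.becomingNoisyEventStream.listen((_) {
    player.pause();
  });
}
```

A podcast player may prefer to pause even for `duck` interruptions, since speech is hard to follow at a lowered volume.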

|

||||

|

||||

Note: If your app uses a number of different audio plugins, e.g. for audio recording, text to speech, or background audio, those plugins may internally override each other's audio session settings, since there is only a single audio session shared by your app. Therefore, it is recommended that you apply your own preferred configuration using audio_session after all other audio plugins have loaded. You may consider asking the developer of each audio plugin you use to provide an option to not overwrite these global settings and allow them to be managed externally.

|

||||

|

||||

## Android setup

|

||||

|

||||

These instructions assume that your project follows the new project template introduced in Flutter 1.12. If your project was created prior to 1.12 and uses the old project structure, you can update your project to follow the [new project template](https://github.com/flutter/flutter/wiki/Upgrading-pre-1.12-Android-projects).

|

||||

|

||||

Additionally:

|

||||

|

||||

1. Edit your project's `AndroidManifest.xml` file to declare the wake lock and foreground service permissions, and add component entries for the `<service>` and `<receiver>`:

|

||||

|

||||

```xml

|

||||

<manifest ...>

|

||||

<uses-permission android:name="android.permission.WAKE_LOCK"/>

|

||||

<uses-permission android:name="android.permission.FOREGROUND_SERVICE"/>

|

||||

|

||||

<application ...>

|

||||

|

||||

...

|

||||

|

||||

<service android:name="com.ryanheise.audioservice.AudioService">

|

||||

<intent-filter>

|

||||

<action android:name="android.media.browse.MediaBrowserService" />

|

||||

</intent-filter>

|

||||

</service>

|

||||

|

||||

<receiver android:name="com.ryanheise.audioservice.MediaButtonReceiver" >

|

||||

<intent-filter>

|

||||

<action android:name="android.intent.action.MEDIA_BUTTON" />

|

||||

</intent-filter>

|

||||

</receiver>

|

||||

</application>

|

||||

</manifest>

|

||||

```

|

||||

|

||||

2. Starting from Flutter 1.12, you will need to disable the `shrinkResources` setting in your `android/app/build.gradle` file, otherwise the icon resources used in the Android notification will be removed during the build:

|

||||

|

||||

```groovy

|

||||

android {

|

||||

compileSdkVersion 28

|

||||

|

||||

...

|

||||

|

||||

buildTypes {

|

||||

release {

|

||||

signingConfig ...

|

||||

shrinkResources false // ADD THIS LINE

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

## iOS setup

|

||||

|

||||

Insert this in your `Info.plist` file:

|

||||

|

||||

```xml

|

||||

<key>UIBackgroundModes</key>

|

||||

<array>

|

||||

<string>audio</string>

|

||||

</array>

|

||||

```

|

||||

|

||||

The example project may be consulted for context.

|

||||

|

||||

## macOS setup

|

||||

The minimum supported macOS version is 10.12.2 (though this could be changed with some work in the future).

|

||||

Modify the platform line in `macos/Podfile` to look like the following:

|

||||

```ruby

|

||||

platform :osx, '10.12.2'

|

||||

```

|

||||

|

||||

# Where can I find more information?

|

||||

|

||||

* [Tutorial](https://github.com/ryanheise/audio_service/wiki/Tutorial): walks you through building a simple audio player while explaining the basic concepts.

|

||||

* [Full example](https://github.com/ryanheise/audio_service/blob/master/example/lib/main.dart): The `example` subdirectory on GitHub demonstrates both music and text-to-speech use cases.

|

||||

* [Frequently Asked Questions](https://github.com/ryanheise/audio_service/wiki/FAQ)

|

||||

* [API documentation](https://pub.dev/documentation/audio_service/latest/audio_service/audio_service-library.html)

|

||||

8

android/.gitignore

vendored

Normal file

|

|

@ -0,0 +1,8 @@

|

|||

*.iml

|

||||

.gradle

|

||||

/local.properties

|

||||

/.idea/workspace.xml

|

||||

/.idea/libraries

|

||||

.DS_Store

|

||||

/build

|

||||

/captures

|

||||

39

android/build.gradle

Normal file

|

|

@ -0,0 +1,39 @@

|

|||

group 'com.ryanheise.audioservice'

|

||||

version '1.0-SNAPSHOT'

|

||||

|

||||

buildscript {

|

||||

repositories {

|

||||

google()

|

||||

jcenter()

|

||||

}

|

||||

|

||||

dependencies {

|

||||

classpath 'com.android.tools.build:gradle:3.5.0'

|

||||

}

|

||||

}

|

||||

|

||||

rootProject.allprojects {

|

||||

repositories {

|

||||

google()

|

||||

jcenter()

|

||||

}

|

||||

}

|

||||

|

||||

apply plugin: 'com.android.library'

|

||||

|

||||

android {

|

||||

compileSdkVersion 28

|

||||

|

||||

defaultConfig {

|

||||

minSdkVersion 16

|

||||

testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"

|

||||

}

|

||||

lintOptions {

|

||||

disable 'InvalidPackage'

|

||||

}

|

||||

}

|

||||

|

||||

dependencies {

|

||||

implementation 'androidx.core:core:1.1.0'

|

||||

implementation 'androidx.media:media:1.1.0'

|

||||

}

|

||||

4

android/gradle.properties

Normal file

|

|

@ -0,0 +1,4 @@

|

|||

org.gradle.jvmargs=-Xmx1536M

|

||||

android.enableR8=true

|

||||

android.useAndroidX=true

|

||||

android.enableJetifier=true

|

||||

BIN

android/gradle/wrapper/gradle-wrapper.jar

vendored

Normal file

6

android/gradle/wrapper/gradle-wrapper.properties

vendored

Normal file

|

|

@ -0,0 +1,6 @@

|

|||

#Thu Sep 17 20:40:30 CEST 2020

|

||||

distributionBase=GRADLE_USER_HOME

|

||||

distributionPath=wrapper/dists

|

||||

zipStoreBase=GRADLE_USER_HOME

|

||||

zipStorePath=wrapper/dists

|

||||

distributionUrl=https\://services.gradle.org/distributions/gradle-5.6.4-all.zip

|

||||

172

android/gradlew

vendored

Normal file

|

|

@ -0,0 +1,172 @@

|

|||

#!/usr/bin/env sh

|

||||

|

||||

##############################################################################

|

||||

##

|

||||

## Gradle start up script for UN*X

|

||||

##

|

||||

##############################################################################

|

||||

|

||||

# Attempt to set APP_HOME

|

||||

# Resolve links: $0 may be a link

|

||||

PRG="$0"

|

||||

# Need this for relative symlinks.

|

||||

while [ -h "$PRG" ] ; do

|

||||

ls=`ls -ld "$PRG"`

|

||||

link=`expr "$ls" : '.*-> \(.*\)$'`

|

||||

if expr "$link" : '/.*' > /dev/null; then

|

||||

PRG="$link"

|

||||

else

|

||||

PRG=`dirname "$PRG"`"/$link"

|

||||

fi

|

||||

done

|

||||

SAVED="`pwd`"

|

||||

cd "`dirname \"$PRG\"`/" >/dev/null

|

||||

APP_HOME="`pwd -P`"

|

||||

cd "$SAVED" >/dev/null

|

||||

|

||||

APP_NAME="Gradle"

|

||||

APP_BASE_NAME=`basename "$0"`

|

||||

|

||||

# Add default JVM options here. You can also use JAVA_OPTS and GRADLE_OPTS to pass JVM options to this script.

|

||||

DEFAULT_JVM_OPTS=""

|

||||

|

||||

# Use the maximum available, or set MAX_FD != -1 to use that value.

|

||||

MAX_FD="maximum"

|

||||

|

||||

warn () {

|

||||

echo "$*"

|

||||

}

|

||||

|

||||

die () {

|

||||

echo

|

||||

echo "$*"

|

||||

echo

|

||||

exit 1

|

||||

}

|

||||

|

||||

# OS specific support (must be 'true' or 'false').

|

||||

cygwin=false

|

||||

msys=false

|

||||

darwin=false

|

||||

nonstop=false

|

||||

case "`uname`" in

|

||||

CYGWIN* )

|

||||

cygwin=true

|

||||

;;

|

||||

Darwin* )

|

||||

darwin=true

|

||||

;;

|

||||

MINGW* )

|

||||

msys=true

|

||||

;;

|

||||

NONSTOP* )

|

||||

nonstop=true

|

||||

;;

|

||||

esac

|

||||

|

||||

CLASSPATH=$APP_HOME/gradle/wrapper/gradle-wrapper.jar

|

||||

|

||||

# Determine the Java command to use to start the JVM.

|

||||

if [ -n "$JAVA_HOME" ] ; then

|

||||

if [ -x "$JAVA_HOME/jre/sh/java" ] ; then

|

||||

# IBM's JDK on AIX uses strange locations for the executables

|

||||

JAVACMD="$JAVA_HOME/jre/sh/java"

|

||||

else

|

||||

JAVACMD="$JAVA_HOME/bin/java"

|

||||

fi

|

||||

if [ ! -x "$JAVACMD" ] ; then

|

||||

die "ERROR: JAVA_HOME is set to an invalid directory: $JAVA_HOME

|

||||

|

||||

Please set the JAVA_HOME variable in your environment to match the

|

||||

location of your Java installation."

|

||||

fi

|

||||

else

|

||||

JAVACMD="java"

|

||||

which java >/dev/null 2>&1 || die "ERROR: JAVA_HOME is not set and no 'java' command could be found in your PATH.

|

||||

|

||||

Please set the JAVA_HOME variable in your environment to match the

|

||||

location of your Java installation."

|

||||

fi

|

||||

|

||||

# Increase the maximum file descriptors if we can.

|

||||

if [ "$cygwin" = "false" -a "$darwin" = "false" -a "$nonstop" = "false" ] ; then

|

||||

MAX_FD_LIMIT=`ulimit -H -n`

|

||||

if [ $? -eq 0 ] ; then

|

||||

if [ "$MAX_FD" = "maximum" -o "$MAX_FD" = "max" ] ; then

|

||||

MAX_FD="$MAX_FD_LIMIT"

|

||||

fi

|

||||

ulimit -n $MAX_FD

|

||||

if [ $? -ne 0 ] ; then

|

||||

warn "Could not set maximum file descriptor limit: $MAX_FD"

|

||||

fi

|

||||

else

|

||||

warn "Could not query maximum file descriptor limit: $MAX_FD_LIMIT"

|

||||

fi

|

||||

fi

|

||||

|

||||

# For Darwin, add options to specify how the application appears in the dock

|

||||

if $darwin; then

|

||||

GRADLE_OPTS="$GRADLE_OPTS \"-Xdock:name=$APP_NAME\" \"-Xdock:icon=$APP_HOME/media/gradle.icns\""

|

||||

fi

|

||||

|

||||

# For Cygwin, switch paths to Windows format before running java

|

||||

if $cygwin ; then

|

||||

APP_HOME=`cygpath --path --mixed "$APP_HOME"`

|

||||

CLASSPATH=`cygpath --path --mixed "$CLASSPATH"`

|

||||

JAVACMD=`cygpath --unix "$JAVACMD"`

|

||||

|

||||

# We build the pattern for arguments to be converted via cygpath

|

||||

ROOTDIRSRAW=`find -L / -maxdepth 1 -mindepth 1 -type d 2>/dev/null`

|

||||

SEP=""

|

||||

for dir in $ROOTDIRSRAW ; do

|

||||

ROOTDIRS="$ROOTDIRS$SEP$dir"

|

||||

SEP="|"

|

||||

done

|

||||

OURCYGPATTERN="(^($ROOTDIRS))"

|

||||

# Add a user-defined pattern to the cygpath arguments

|

||||

if [ "$GRADLE_CYGPATTERN" != "" ] ; then

|

||||

OURCYGPATTERN="$OURCYGPATTERN|($GRADLE_CYGPATTERN)"

|

||||

fi

|

||||

# Now convert the arguments - kludge to limit ourselves to /bin/sh

|

||||

i=0

|

||||

for arg in "$@" ; do

|

||||

CHECK=`echo "$arg"|egrep -c "$OURCYGPATTERN" -`

|

||||

CHECK2=`echo "$arg"|egrep -c "^-"` ### Determine if an option

|

||||

|

||||

if [ $CHECK -ne 0 ] && [ $CHECK2 -eq 0 ] ; then ### Added a condition

|

||||

eval `echo args$i`=`cygpath --path --ignore --mixed "$arg"`

|

||||

else

|

||||

eval `echo args$i`="\"$arg\""

|

||||

fi

|

||||

i=$((i+1))

|

||||

done

|

||||

case $i in

|

||||

(0) set -- ;;

|

||||

(1) set -- "$args0" ;;

|

||||

(2) set -- "$args0" "$args1" ;;

|

||||

(3) set -- "$args0" "$args1" "$args2" ;;

|

||||

(4) set -- "$args0" "$args1" "$args2" "$args3" ;;

|

||||

(5) set -- "$args0" "$args1" "$args2" "$args3" "$args4" ;;

|

||||

(6) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" ;;

|

||||

(7) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" "$args6" ;;

|

||||

(8) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" "$args6" "$args7" ;;

|

||||

(9) set -- "$args0" "$args1" "$args2" "$args3" "$args4" "$args5" "$args6" "$args7" "$args8" ;;

|

||||

esac

|

||||

fi

|

||||

|

||||

# Escape application args

|

||||

save () {

|

||||

for i do printf %s\\n "$i" | sed "s/'/'\\\\''/g;1s/^/'/;\$s/\$/' \\\\/" ; done

|

||||

echo " "

|

||||

}

|

||||

APP_ARGS=$(save "$@")

|

||||

|

||||

# Collect all arguments for the java command, following the shell quoting and substitution rules

|

||||

eval set -- $DEFAULT_JVM_OPTS $JAVA_OPTS $GRADLE_OPTS "\"-Dorg.gradle.appname=$APP_BASE_NAME\"" -classpath "\"$CLASSPATH\"" org.gradle.wrapper.GradleWrapperMain "$APP_ARGS"

|

||||

|

||||

# by default we should be in the correct project dir, but when run from Finder on Mac, the cwd is wrong

|

||||

if [ "$(uname)" = "Darwin" ] && [ "$HOME" = "$PWD" ]; then

|

||||

cd "$(dirname "$0")"

|

||||

fi

|

||||

|

||||

exec "$JAVACMD" "$@"

|

||||

84

android/gradlew.bat

vendored

Normal file

|

|

@ -0,0 +1,84 @@

|

|||

@if "%DEBUG%" == "" @echo off

|

||||

@rem ##########################################################################

|

||||

@rem

|

||||

@rem Gradle startup script for Windows

|

||||

@rem

|

||||

@rem ##########################################################################

|

||||

|

||||

@rem Set local scope for the variables with windows NT shell

|

||||

if "%OS%"=="Windows_NT" setlocal

|

||||

|

||||

set DIRNAME=%~dp0

|

||||

if "%DIRNAME%" == "" set DIRNAME=.

|

||||

set APP_BASE_NAME=%~n0

|

||||

set APP_HOME=%DIRNAME%

|

||||

|

||||

@rem Add default JVM options here. You can also use JAVA_OPTS and GRADLE_OPTS to pass JVM options to this script.

|

||||

set DEFAULT_JVM_OPTS=

|

||||

|

||||

@rem Find java.exe

|

||||

if defined JAVA_HOME goto findJavaFromJavaHome

|

||||

|

||||

set JAVA_EXE=java.exe

|

||||

%JAVA_EXE% -version >NUL 2>&1

|

||||

if "%ERRORLEVEL%" == "0" goto init

|

||||

|

||||

echo.

|

||||

echo ERROR: JAVA_HOME is not set and no 'java' command could be found in your PATH.

|

||||

echo.

|

||||

echo Please set the JAVA_HOME variable in your environment to match the

|

||||

echo location of your Java installation.

|

||||

|

||||

goto fail

|

||||

|

||||

:findJavaFromJavaHome

|

||||

set JAVA_HOME=%JAVA_HOME:"=%

|

||||

set JAVA_EXE=%JAVA_HOME%/bin/java.exe

|

||||

|

||||

if exist "%JAVA_EXE%" goto init

|

||||

|

||||

echo.

|

||||

echo ERROR: JAVA_HOME is set to an invalid directory: %JAVA_HOME%

|

||||

echo.

|

||||

echo Please set the JAVA_HOME variable in your environment to match the

|

||||

echo location of your Java installation.

|

||||

|

||||

goto fail

|

||||

|

||||

:init

|

||||

@rem Get command-line arguments, handling Windows variants

|

||||

|

||||

if not "%OS%" == "Windows_NT" goto win9xME_args

|

||||

|

||||

:win9xME_args

|

||||

@rem Slurp the command line arguments.

|

||||

set CMD_LINE_ARGS=

|

||||

set _SKIP=2

|

||||

|

||||

:win9xME_args_slurp

|

||||

if "x%~1" == "x" goto execute

|

||||

|

||||

set CMD_LINE_ARGS=%*

|

||||

|

||||

:execute

|

||||

@rem Setup the command line

|

||||

|

||||

set CLASSPATH=%APP_HOME%\gradle\wrapper\gradle-wrapper.jar

|

||||

|

||||

@rem Execute Gradle

|

||||

"%JAVA_EXE%" %DEFAULT_JVM_OPTS% %JAVA_OPTS% %GRADLE_OPTS% "-Dorg.gradle.appname=%APP_BASE_NAME%" -classpath "%CLASSPATH%" org.gradle.wrapper.GradleWrapperMain %CMD_LINE_ARGS%

|

||||

|

||||

:end

|

||||

@rem End local scope for the variables with windows NT shell

|

||||

if "%ERRORLEVEL%"=="0" goto mainEnd

|

||||

|

||||

:fail

|

||||

rem Set variable GRADLE_EXIT_CONSOLE if you need the _script_ return code instead of

|

||||

rem the _cmd.exe /c_ return code!

|

||||

if not "" == "%GRADLE_EXIT_CONSOLE%" exit 1

|

||||

exit /b 1

|

||||

|

||||

:mainEnd

|

||||

if "%OS%"=="Windows_NT" endlocal

|

||||

|

||||

:omega

|

||||

1

android/settings.gradle

Normal file

|

|

@ -0,0 +1 @@

|

|||

rootProject.name = 'audio_service'

|

||||

3

android/src/main/AndroidManifest.xml

Normal file

|

|

@ -0,0 +1,3 @@

|

|||

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

|

||||

package="com.ryanheise.audioservice">

|

||||

</manifest>

|

||||

|

|

@ -0,0 +1,8 @@

|

|||

package com.ryanheise.audioservice;

|

||||

|

||||

public enum AudioInterruption {

|

||||

pause,

|

||||

temporaryPause,

|

||||

temporaryDuck,

|

||||

unknownPause,

|

||||

}

|

||||

|

|

@ -0,0 +1,16 @@

|

|||

package com.ryanheise.audioservice;

|

||||

|

||||

public enum AudioProcessingState {

|

||||

none,

|

||||

connecting,

|

||||

ready,

|

||||

buffering,

|

||||

fastForwarding,

|

||||

rewinding,

|

||||

skippingToPrevious,

|

||||

skippingToNext,

|

||||

skippingToQueueItem,

|

||||

completed,

|

||||

stopped,

|

||||

error,

|

||||

}

|

||||

|

|

@ -0,0 +1,805 @@

|

|||

package com.ryanheise.audioservice;

|

||||

|

||||

import android.app.Activity;

|

||||

import android.app.Notification;

|

||||

import android.app.NotificationChannel;

|

||||

import android.app.NotificationManager;

|

||||

import android.app.PendingIntent;

|

||||

import android.content.BroadcastReceiver;

|

||||

import android.content.ComponentName;

|

||||

import android.content.Context;

|

||||

import android.content.Intent;

|

||||

import android.content.IntentFilter;

|

||||

import android.graphics.Bitmap;

|

||||

import android.graphics.BitmapFactory;

|

||||

import android.media.AudioAttributes;

|

||||

import android.media.AudioFocusRequest;

|

||||

import android.media.AudioManager;

|

||||

import android.media.MediaDescription;

|

||||

import android.media.MediaMetadata;

|

||||

import android.os.Build;

|

||||

import android.os.Bundle;

|

||||

import android.os.Handler;

|

||||

import android.os.Looper;

|

||||

import android.os.PowerManager;

|

||||

import android.support.v4.media.MediaBrowserCompat;

|

||||

import android.support.v4.media.MediaDescriptionCompat;

|

||||

import android.support.v4.media.MediaMetadataCompat;

|

||||

import android.support.v4.media.RatingCompat;

|

||||

import android.support.v4.media.session.MediaControllerCompat;

|

||||

import android.support.v4.media.session.MediaSessionCompat;

|

||||

import android.support.v4.media.session.PlaybackStateCompat;

|

||||